Neo-Nazis, white supremacists, and antigovernment groups – racially/ethnically motivated violent extremists, aka REMVE – are discussing and posting about AI use on the main social media platforms that they favor. Privately and openly, they are discussing, testing, and using AI, while also developing their own versions and recruiting engineers and teams for special projects, including breaking into banks.

The most troubling examples found by the MEMRI Domestic Terrorism Threat Monitor (DTTM) research team in its work on this topic involve extremists actually discussing the use of AI to plan terror attacks, including making weapons of mass destruction. One accelerationist group that seeks to bring about the total collapse of society recently held a conversation in a Facebook group about trying to trick an AI chatbot into providing instructions for making mustard gas and napalm. These and other examples are detailed in a new MEMRI DTTM report to be released later this month.

The report also covers the leader of a designated terrorist entity – a former U.S. government contractor who frequently calls for attacks inside the U.S. from his safe haven in Russia – who published ChatGPT-generated suggestions for waging guerrilla warfare and for identifying the U.S. critical infrastructure most vulnerable to attack. ChatGPT said that the most vulnerable U.S. infrastructure was "the electrical grid."

Others are talking about using AI to plan armed uprisings to overthrow the current U.S. governmental system, and sharing their AI-created versions of U.S. flags, military uniforms, and graphic designs of the White House. They also discuss AI's use for recruitment and for spreading their ideology and propaganda online, including with videos they create. Deepfake videos showing Hitler delivering a speech in English and Harry Potter actress Emma Watson reading Hitler's Mein Kampf aloud have gone viral on their platforms.

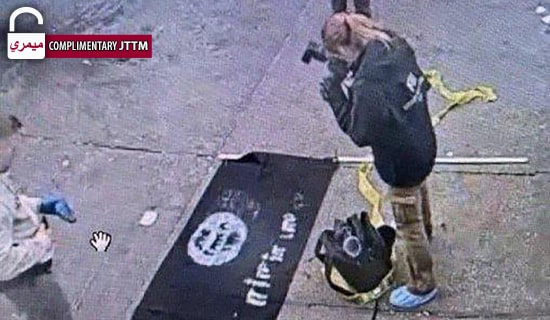

MEMRI research has already found jihadi terrorists talking about using AI to develop weapons systems, including drones and self-driving car bombs. As I wrote in Newsweek in March, a popular user on an ISIS-operated Rocket.Chat server revealed in December 2022 that he had asked ChatGPT for advice on supporting the caliphate, and shared the results. In late March, ISIS supporters discussed using ChatGPT for coding and building software for hacking and encryption, concluding that "2023 will be a very special year for hackers, thanks to chatbots and AI programs."

As early as March 2016, Microsoft released the AI chatbot Tay, but shut it down within 24 hours after it was manipulated into tweeting pro-Hitler messages. Seven years later, the danger posed by neo-Nazi and white supremacist use of AI has only grown. On the Hello History chat app, released in early January 2023 and downloaded over 10,000 times on Google Play alone, users can chat with simulated versions of historical figures, including Adolf Hitler and Nazi propagandist Joseph Goebbels.

As AI technology races ahead – total global corporate investment in AI reached nearly $94 billion in 2021 – many companies involved in its development are laying off their ethicists, who examine algorithms to prevent the kind of extremism found on the platforms cited in this article.

The news of these layoffs comes as over 2,000 tech leaders and researchers have demanded safety protocols and called on the industry to "immediately pause for at least six months" the training of ever more advanced AI systems, citing "profound risks to society and humanity." On top of this, on May 1, artificial intelligence pioneer and "godfather" Geoffrey Hinton quit Google, where he had become one of the most respected voices in the field, so that he could speak freely about the dangers of AI.

In response to the ongoing backlash, Sam Altman, CEO of ChatGPT creator OpenAI, explained in an interview that "time" is needed "to see how [the technology is] used," that "we're monitoring [it] very closely," and that "we can take it back" and "turn things off." Altman's claims serve the AI industry.

Nevertheless, in his May 16 testimony at a Senate subcommittee hearing on AI oversight, Altman stressed the need for regulation and said that the industry wanted to work with governments, but how exactly that would happen was not clear. Following the launch of ChatGPT in November 2022, and prior to the hearing, Altman held private meetings with key lawmakers, and this evening he is scheduled to attend a dinner with members of Congress.

The neo-Nazi and white supremacist focus on AI, here in the U.S. and worldwide, is a national security threat that is not even mentioned in recent warnings about AI. While extremists hope AI will help "wreak havoc" – per a recent post in an accelerationist chat – it is not known whether U.S. and Western counterterrorism agencies have even begun to address this threat.

The closest thing to an acknowledgment of the danger came in the first week of May, when President Biden brought together tech leaders involved in AI who were warning of its potential "enormous danger," while promising to invest millions in advancing research on it.

Earlier, in April, Cybersecurity and Infrastructure Security Agency Director Jen Easterly warned during a panel discussion about the dangers of this technology and of access to it: "We are hurtling forward in a way that I think is not the right level of responsibility, implementing AI capabilities in production, without any legal barriers, without any regulation." And Senator Chris Murphy recently warned about AI: "We made a mistake by trusting the technology industry to self-police social media... I just can't believe that we are on the precipice of making the same mistake."

Whether the call to halt AI development has come in time remains to be seen. Regardless, this private industry should not be operating without government oversight. What is clear is that the industry needs to follow standards and create guidelines to examine issues like this, steps that have still not been taken for terrorist use of social media. While the National Institute of Standards and Technology has reportedly developed frameworks for responsible AI use, no one seems to know about them.

*Steven Stalinsky, Ph.D., is Executive Director of MEMRI.*